Protocol Buffers and FarSounder

As technical people interested in the "magic" behind the scenes, FarSounder's engineers are often curious about the technology behind the products we use. Sometimes the companies that make those products share a little about the choices and technologies that went into them. We thought we'd do the same and discuss some of FarSounder's behind-the-scenes technology as it relates to our recently open-sourced protobuf-matlab project.

Editor's note: This is a slightly more technical blog post than usual. Though it does not discuss end-user features, we think it offers an interesting glimpse for some of our more technical readers.

FarSounder's sonar products generate large amounts of data that move through our software system in various stages of processing and display. To help ensure software scalability and stability, our data management infrastructure must be well thought out and robustly designed. We use Protocol Buffers (aka "protobuf") for serializing and storing all types of data that we collect when field testing and demonstrating our systems. While the switch to protobuf as our data format is relatively new in FarSounder's history, it has quickly become an integral part of our back-end systems. Since we've derived so much benefit from it, we thought it would be useful to others to discuss why we adopted it, how we use it, and our recent open source release of the protobuf-matlab project.

Some Background

Developing and improving a sonar system requires collecting and storing a large amount of data from various types of tests. Over the years, FarSounder has collected lots of data that our engineers and scientists use when they are prototyping new algorithms and processing techniques. Effectively utilizing this data for new research and development efforts requires being able to access it from a number of different programming languages and environments.

Early in our history, we relied on a few custom formats for storing our data. Each of these formats had custom-written parsers in all the languages we wanted to support. Naturally, it was cumbersome to update the formats and corresponding parsers whenever we wanted to add new information to our data stream. As time went on, it became increasingly difficult to keep using these custom formats because of the growing number of versions we had to support to maintain backwards compatibility.

As we started to consider how to improve this system, a number of things became immediately clear:

we wanted to be able to structure our data hierarchically,

the format needed to allow for adding new fields and deprecating old ones while maintaining backwards compatibility with our older formats, and

the parsing code had to be computer generated so we could minimize human error and have uniformity across the different languages we use.

Right about the same time we started formalizing this list of requirements, Google open sourced protobuf, and it immediately became our top choice: it met almost all the requirements and was a well-tested system. The only thing we had to add to it was a compiler for Matlab, as it wasn't an officially supported language (more on this later).

Protobuf in Action

Before we get to how we added Matlab support, let's go over a brief example of how we use Protocol Buffers, so you can get a sense of its versatility. Since there are good protobuf tutorials on the web already, we'll instead focus on a particular type of message that we use for managing arrays of data (we creatively call it `ArrayData`):

    // A generic multidimensional array protobuf message. The array data is
    // stored as a contiguous array of bytes with type, order, and
    // dimensionality as specified by the associated member variables:
    message ArrayData {
      enum Type {
        kByte = 0;
        kInt16 = 1;
        kUint16 = 2;
        kInt32 = 3;
        kUint32 = 4;
        kInt64 = 5;
        kUint64 = 6;
        kFloat32 = 7;
        kFloat64 = 8;
        kComplex64 = 9;
        kComplex128 = 10;
      }
      enum Order {
        kRowMajor = 0;
        kColumnMajor = 1;
      }
      repeated int32 dims = 1;   // dimensions
      optional Type type = 2;    // value type
      optional Order order = 3;  // array order
      optional bytes data = 4;   // raw binary array data
    }

Now, suppose you need to store timeseries data from a multi-channel sonar system. You might add a `SonarData` message with an `ArrayData` member for the timeseries:

    message SonarData {
      optional ArrayData raw_timeseries = 1;
      optional string serial_number = 2;
    }

You can now direct the protobuf compiler to emit parsers in whichever (supported) languages you'd like!
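To make the `ArrayData` layout a bit more concrete, here is a rough Python sketch of how `dims`, `type`, and `order` might be used to interpret the raw `data` bytes. It is not the code the protobuf compiler generates and not FarSounder's implementation: a plain dict stands in for the generated message class, the helper names (`pack_array`, `element`, `flat_index`) are hypothetical, and little-endian byte order is an assumption, since the message definition does not specify one.

```python
import struct

# Values copied from the ArrayData.Type and ArrayData.Order enums above.
# Only three types are handled here to keep the sketch short.
K_INT16, K_FLOAT32, K_FLOAT64 = 1, 7, 8
K_ROW_MAJOR, K_COLUMN_MAJOR = 0, 1
STRUCT_CODES = {K_INT16: "h", K_FLOAT32: "f", K_FLOAT64: "d"}


def flat_index(index, dims, order):
    """Map a multidimensional index to an element offset in the flat buffer."""
    pairs = list(zip(index, dims))
    if order == K_ROW_MAJOR:
        pairs.reverse()  # in row-major order the last dimension varies fastest
    offset, stride = 0, 1
    for i, n in pairs:
        offset += i * stride
        stride *= n
    return offset


def pack_array(values, dims, type_, order=K_ROW_MAJOR):
    """Build a dict mirroring ArrayData's fields from a flat list of values."""
    count = 1
    for n in dims:
        count *= n
    if count != len(values):
        raise ValueError("dims do not match the number of values")
    # "<" means little-endian; this byte order is an assumption of the sketch.
    data = struct.pack("<%d%s" % (count, STRUCT_CODES[type_]), *values)
    return {"dims": list(dims), "type": type_, "order": order, "data": data}


def element(msg, index):
    """Read one element out of the raw bytes using dims, type, and order."""
    code = STRUCT_CODES[msg["type"]]
    size = struct.calcsize("<" + code)
    offset = flat_index(index, msg["dims"], msg["order"]) * size
    return struct.unpack_from("<" + code, msg["data"], offset)[0]


# A 2-channel x 3-sample timeseries, stored channel by channel (row-major).
msg = pack_array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], dims=(2, 3), type_=K_FLOAT32)
print(len(msg["data"]))      # 24: six float32 values at 4 bytes each
print(element(msg, (1, 0)))  # 4.0: first sample of the second channel
```

Because the element type, dimensions, and ordering travel with the bytes, a reader in any language can reconstruct the array without a format-specific parser for each kind of data.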

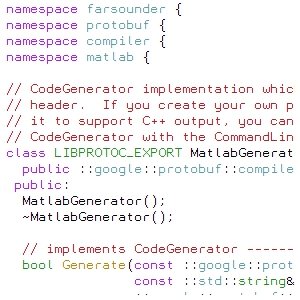

The Protobuf-Matlab Project

As mentioned earlier, one of our requirements was to be able to access our data from Matlab. Since Matlab is not one of the official languages supported by the Protobuf compiler, we developed a plug-in to emit Matlab parsers as a set of .m files. The compiler and resultant parsers have been in use internally at FarSounder for a couple of years now with great success. If you're interested in utilizing this for one of your projects, the protobuf-matlab project's README file has details on setup and use.

More Time for Table Tennis

In summary, by providing a convenient way to offload the work involved in serializing our data, Protocol Buffers has been a big boost to both our productivity and happiness as developers. It has allowed us to focus more on the interesting problems of how to process and utilize our data more effectively, which ultimately leads to a better product... and, of course, frees up some time to play a round of table tennis.